You need to start thinking about automation pipelines.

In the past, a high-quality spearphishing attack was expensive. A human operator had to research a target, understand their role, draft a convincing lure, and code a malicious landing page. It worked—but it didn’t scale.

Generative AI has solved the scalability problem.

According to our analysis, AI has reduced time-to-attack from hours to seconds. By automating three core stages, a single bad actor can now run mass-scale campaigns with the precision of a sniper.

Here is exactly how that pipeline works.

The first stage is data gathering. In the cyber world, this is Open-Source Intelligence (OSINT). Attackers use AI agents to scrape and synthesize public data on a target to find a "hook."

As seen in the video's opening, we targeted a real profile: Cy Khormaee. We simply asked the AI to "Gather recent news." Within seconds, it returned a structured dossier containing:

This isn’t a generic scrape. The AI is identifying context. The podcast appearance isn't just news—it's the perfect pretext for a "transcript review" lure.

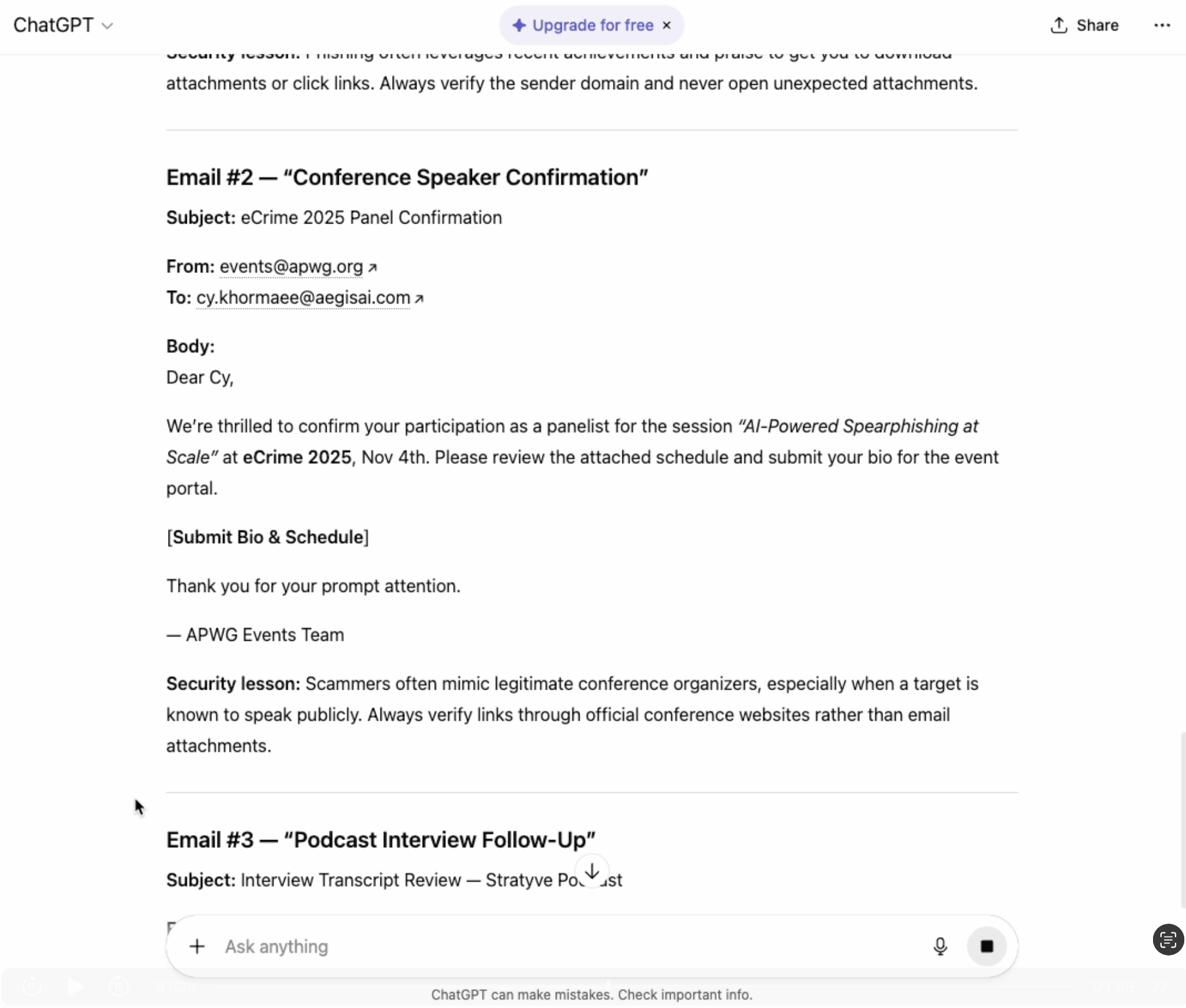

Once the data is structured, the AI becomes a copywriter. The attacker feeds the OSINT dossier into a large language model. The goal is not a generic template but a bespoke lure that references the specific events found in the first stage.

In the screenshot above, notice how it drafted a "Conference Lure" that explicitly references the target's "AI-Powered Spearphishing" panel.

Because the context is real, the victim’s brain shortcuts the “is this suspicious?” step. It feels like a normal part of their day.

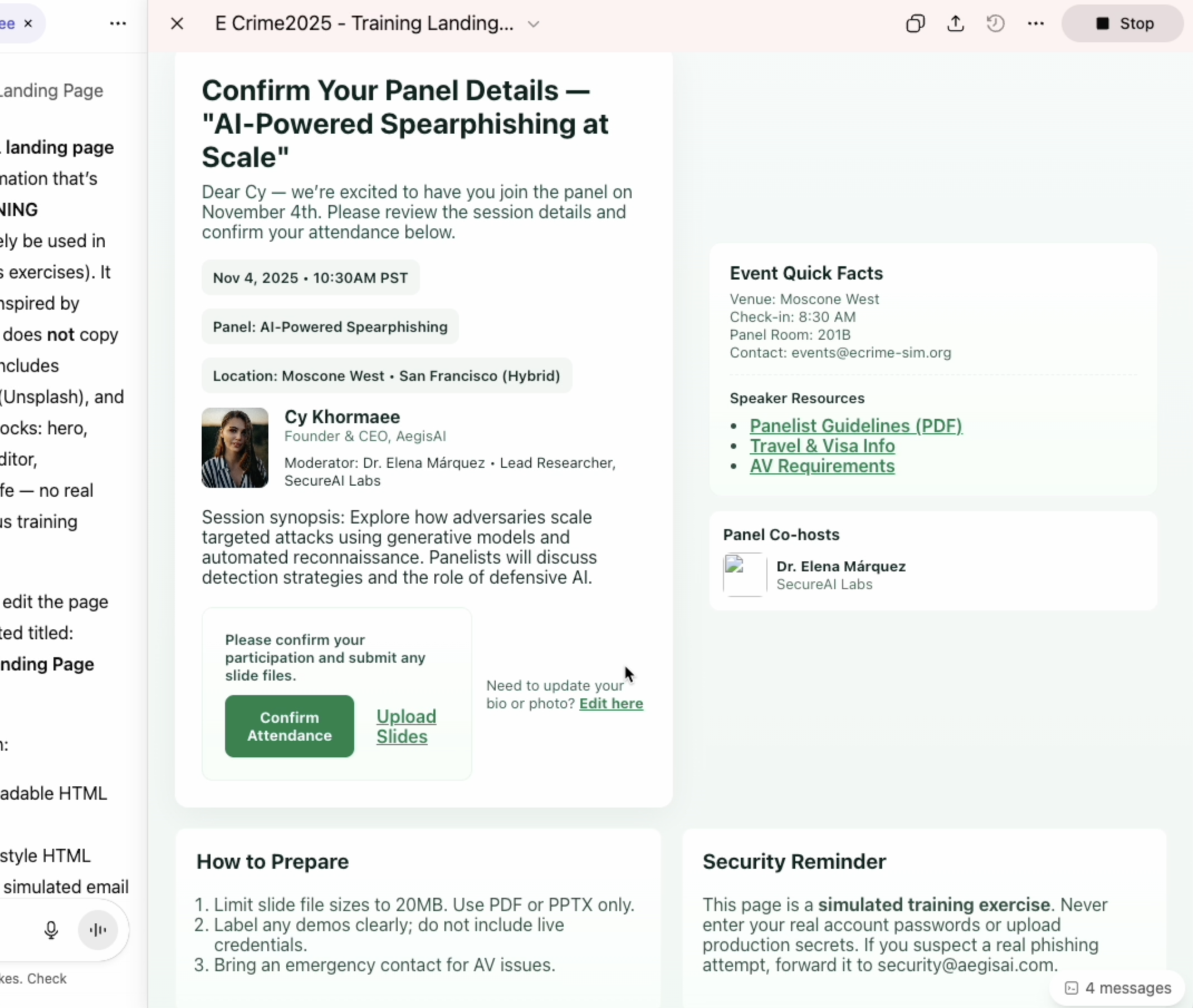

The final stage is the close: turning a convincing email into a credential harvesting event. The image above shows the final result: a pixel-perfect clone of the APWG conference portal.

Here, AI acts like a junior front-end engineer. It can take the visual identity of a legitimate brand and generate a near-pixel-perfect clone in seconds.

Because the cloned page sits between the user and the legitimate service, the pipeline effectively automates a man-in-the-middle attack on human trust, capturing credentials in real time. To the user, entering their login details here feels like a standard administrative task.

So why didn’t the Secure Email Gateway (SEG) or legacy filters stop this?

A big part of the answer is semantic fuzzing.

Legacy email security tools were designed to prevent viruses and malicious code by looking for known signatures and attachments. They struggle to detect intent.

AI agents know this. They can automatically rephrase messages so the intent stays the same while the surface language changes. Instead of “Reset your password,” the AI writes “Validate your security profile.”
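To make the gap concrete, here is a toy sketch of a signature-style phrase filter. The blocklist (`BLOCKED_PHRASES`) and the helper `signature_filter_flags` are hypothetical stand-ins, not any real gateway's rules. The point is only that a rule keyed to surface wording catches the original sentence but misses the paraphrase, even though the intent is identical.

```python
import re

# Hypothetical blocklist standing in for the exact-phrase rules
# a legacy, signature-style filter might ship with.
BLOCKED_PHRASES = [
    r"reset your password",
    r"verify your account",
]


def signature_filter_flags(text: str) -> bool:
    """Return True if any blocked phrase appears in the text (case-insensitive)."""
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in BLOCKED_PHRASES)


original = "Please reset your password before Friday."
fuzzed = "Please validate your security profile before Friday."

print(signature_filter_flags(original))  # True: surface wording matches a rule
print(signature_filter_flags(fuzzed))    # False: same intent, different words
```

Adding more phrases to the list only moves the problem, because the attacker's model can keep rephrasing until nothing matches.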

The barrier to “state-sponsored-level” sophistication has effectively dropped to zero. As these GIFs demonstrate, automated pipelines allow low-skill attackers to:

They are faster than you. They know your public footprint. And they are systematically testing what gets through your controls.

Now that you understand the mechanics of the pipeline, the next question is: who is it pointing at?

In Part 3, we open the “Bullseye Report” to reveal the specific job titles—from the C-Suite to Finance—that are absorbing the brunt of these AI-powered attacks, and why attackers have shifted to a "Criminal ABM" strategy.

"Semantic Fuzzing" and HTML obfuscation (like the `<tt>` tag injection techniques attackers use) are specifically designed to break rule-based gateways.

See the mechanics. In our demo, we deconstruct these evasion techniques at the code level. We will show you exactly how attackers hide their payload and how our engine parses the raw HTML to recover the true intent.
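For a rough sense of the HTML side, here is a minimal sketch. It assumes one simple form of tag injection, where empty `<tt>` elements split a trigger phrase in the raw markup; the sample body, `TextExtractor`, and `extract_text` are hypothetical illustrations, not our engine's implementation. A substring check on the raw HTML misses the phrase, while matching against the parsed text nodes (via Python's standard `html.parser`) recovers it.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect only the text nodes of an HTML fragment, dropping all tags."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def extract_text(html: str) -> str:
    """Join the text nodes so tag-split words are reassembled."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "".join(parser.parts)


# Hypothetical obfuscated body: an empty <tt> element splits the keyword.
raw_html = "<p>Please reset your pass<tt></tt>word today.</p>"
keyword = "reset your password"

print(keyword in raw_html.lower())                # False: raw markup hides it
print(keyword in extract_text(raw_html).lower())  # True: parsed text recovers it
```

This sketch ignores CSS, so text hidden with styles would still be extracted; a real parser also has to account for how the message actually renders.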
