The first place AI proves itself in security is not a flashy SOC copilot or an exotic hunting workflow.

It is email.

Email is still the front door for attackers. It is where social engineering slips in, where invoices get quietly rewritten, where “quick favor” messages land in the one distracted inbox that matters. And unlike a lot of security work, email outcomes are brutally binary: you either stop the attack in time or you don’t.

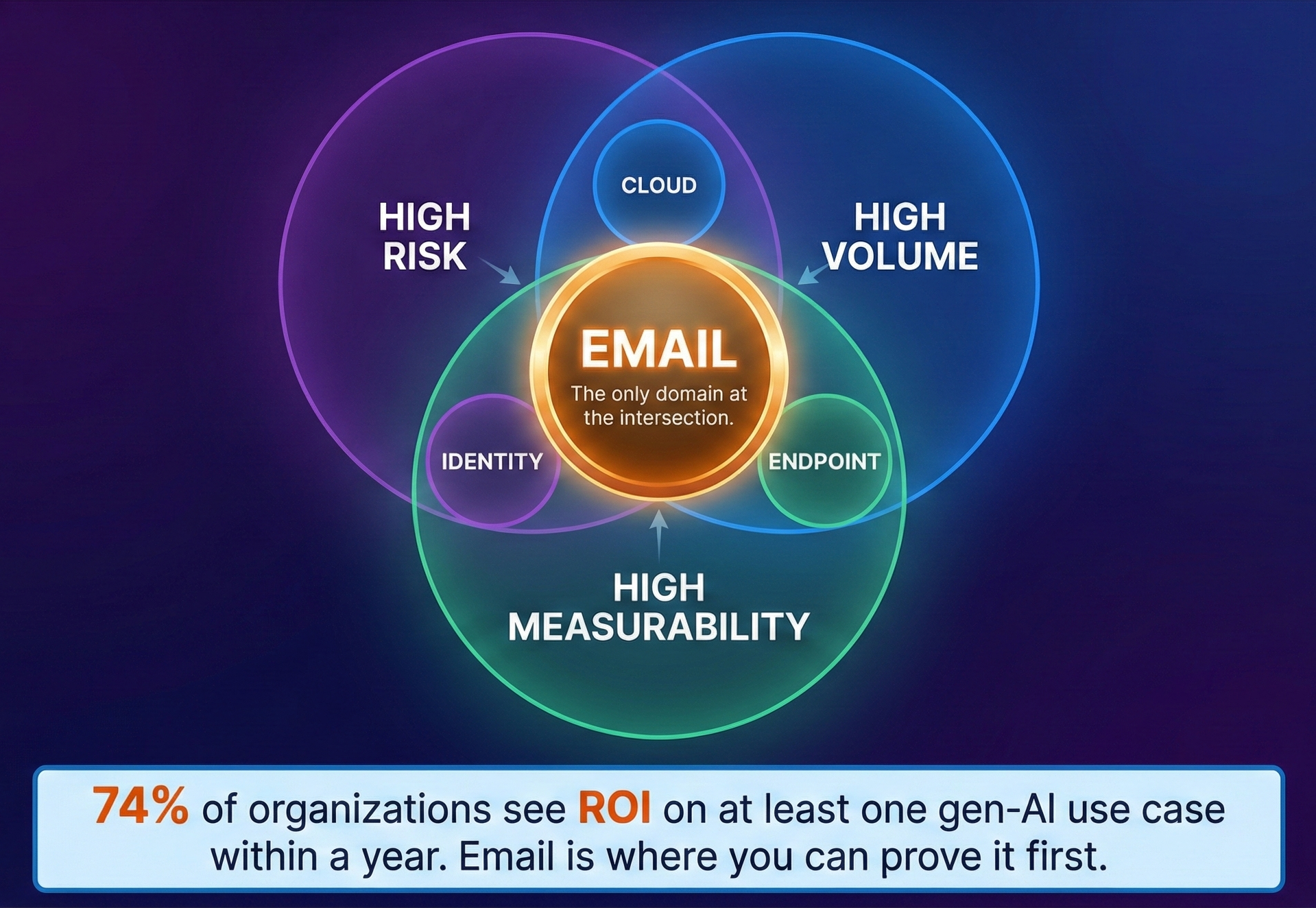

High risk, high volume, and highly measurable.

That combination makes email the proving ground for AI in security, and the place where ROI shows up in months instead of years. That lines up with the broader data: in Google Cloud’s 2025 ROI of AI in Security report, 74% of executives say they see a return on at least one gen-AI use case within the first year. Email is one of the fastest places to make that promise real because it is so easy to measure.

By 2025, the question is not whether AI belongs in security. It is where it is already earning its keep.

Google Cloud’s report The ROI of AI in Security, based on a survey of more than 3,400 senior leaders, shows that AI agents have moved from experiments into core operations. Among organizations using AI agents:

• 46% have deployed them for security operations. [1]

• 67% of early adopters report improved security posture from gen-AI. [1]

• 74% see ROI on at least one gen-AI use case within a year. [1]

AI is no longer just running in a sandbox. It is sitting inside production workflows.

Zoom in and the outcomes are consistent: faster incident resolution, fewer tickets landing on human queues, and better identification of sophisticated threats that legacy tools miss.

If you are a CISO, VP of SecOps, or security architect, that is the level you are held to. Not “are we using AI?” but “is it helping my team get to better decisions, faster?”

In our work at AegisAI, when you follow those questions down into day-to-day work, you end up in the same place almost every time:

The inbox.

Email is almost unfairly well suited to an AI agent in the loop.

It is still where many bad days start. Phishing, business email compromise, supplier fraud, and credential harvesting often begin with a single message. Attackers can iterate quickly, use the same models defenders rely on, and focus on a small group of high-value people: the CFO, the AP specialist, the overextended IT admin. A small improvement in email defenses can prevent a disproportionate amount of financial and operational damage.

The volume is relentless. Security teams do not deal with a handful of suspicious emails. They see thousands of alerts from their email and security stack, a steady flow of “this looks weird, can you check?” from users, and near-identical campaigns that repeat across regions and subsidiaries. Static rules and blocklists cannot keep up. This is exactly the kind of pattern-heavy, context-dependent problem where AI tends to perform well.

The artifacts are rich. Every message carries content (subject, body, tone, urgency), context (who normally talks to whom, about what, and when), technical signals (SPF, DKIM, DMARC, domain age, sending infrastructure), and behavioral history (who replies, clicks, or reports). You are looking at language, identity, and behavior all at once, which is the kind of surface area modern models can make sense of.
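To make the technical-signal part concrete, here is a minimal Python sketch that pulls SPF, DKIM, and DMARC verdicts out of a message's Authentication-Results header using only the standard library. The sample message, its domains, and the helper name `auth_signals` are illustrative assumptions. Real headers vary by mail provider, so treat the regex as a starting point rather than a complete parser.

```python
import re
from email import message_from_string

# Hypothetical sample message; domains and addresses are placeholders.
RAW = """\
From: "Acme Billing" <billing@acme-example.com>
To: ap@example.org
Subject: Updated bank details
Authentication-Results: mx.example.org; spf=pass smtp.mailfrom=acme-example.com; dkim=fail header.d=acme-example.com; dmarc=fail header.from=acme-example.com

Please use the new account for this invoice.
"""


def auth_signals(raw: str) -> dict:
    """Return the spf/dkim/dmarc verdicts found in Authentication-Results.

    A method that does not appear in the header is reported as "none".
    """
    msg = message_from_string(raw)
    header = msg.get("Authentication-Results", "")
    results = {}
    for method in ("spf", "dkim", "dmarc"):
        match = re.search(rf"\b{method}=(\w+)", header)
        results[method] = match.group(1).lower() if match else "none"
    return results


signals = auth_signals(RAW)
```

In this sketch the verdicts are only one input. An agent would weigh them alongside the content, context, and behavioral history described above.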

And the ROI is unusually clear. With email you can count how many malicious messages are blocked or clearly flagged before inbox, how many tickets arise from suspicious messages, how long it takes to triage user reports, and how many fraud or account takeover events trace back to email as the initial vector. Once you add an AI layer, you can ask simple questions every month: did ticket volume go down, did triage time shrink, did successful BEC or invoice fraud attempts drop?

Those are not abstract “AI metrics.” They are operational numbers your organization already understands.

When people hear “AI for email security,” they often picture a black box that consumes messages and spits out a yes or no.

The systems that survive in real SOCs do not look like that.

In practice, the agents that actually earn trust follow a much more human pattern:

Detect → Explain → Act

This is the loop we keep returning to at AegisAI.

Detect is about finding the right kind of “weird.” Not just obvious malware or typo-heavy phishing, but subtle vendor impersonation, quiet bank detail changes in the middle of a long invoice thread, a new sender that looks almost exactly like a C-level account, or the combination of urgency and access requests that often precedes fraud. The goal is not more alerts; it is better alerts that represent real business risk.

Explain is about showing why something is suspicious in a way an analyst would recognize. A label like “phishing, high risk” plus a confidence score forces humans to redo all the reasoning. Instead, the agent should be able to highlight the lookalike domain registered this week, the sudden introduction of new payment instructions in a thread that has never discussed billing, or the way the language matches known BEC playbooks. When the explanation matches an analyst’s instincts, they move faster and are more willing to trust the system.
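As a rough illustration of what "explain" can mean in practice, the sketch below returns plain-language reasons instead of a bare score. Everything in it is an assumption made for the example: the trusted-domain list, the 0.85 similarity threshold, the seven-day domain-age cutoff, the keyword list, and the function name `explain`. It uses string similarity from Python's `difflib` as a stand-in for whatever lookalike detection a real system would use, and it expects domain age to be supplied by some other lookup.

```python
from difflib import SequenceMatcher

# Hypothetical list of domains the organization already does business with.
KNOWN_DOMAINS = ["acme-supplies.com", "example.org"]
PAYMENT_TERMS = ("bank details", "wire", "new account", "iban")


def explain(sender_domain, domain_age_days, thread_discussed_billing, body):
    """Return a list of human-readable reasons a message looks suspicious."""
    reasons = []

    # Lookalike check: similar to, but not the same as, a known domain.
    for known in KNOWN_DOMAINS:
        ratio = SequenceMatcher(None, sender_domain, known).ratio()
        if sender_domain != known and ratio >= 0.85:
            reasons.append(
                f"Sender domain '{sender_domain}' closely resembles "
                f"known domain '{known}'"
            )

    # Recently registered domain (age is supplied by the caller).
    if domain_age_days is not None and domain_age_days <= 7:
        reasons.append(f"Sender domain was registered {domain_age_days} days ago")

    # Payment language appearing in a thread that never discussed billing.
    mentions_payment = any(term in body.lower() for term in PAYMENT_TERMS)
    if mentions_payment and not thread_discussed_billing:
        reasons.append(
            "New payment instructions in a thread with no prior billing discussion"
        )

    return reasons


reasons = explain(
    "acme-suppiies.com", 3, False, "Please use our new account for this invoice."
)
```

Each reason is something an analyst could verify independently, which is the property that matters more than the specific checks chosen here.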

Act is where the agent stops being a scoring engine and starts changing outcomes. Early on, those actions are safe and reversible: soft-quarantining suspicious messages instead of delivering them, adding clear banners that remind users to verify certain requests out of band, routing user-reported messages into smarter buckets, or opening a single enriched case for a campaign instead of dozens of near-duplicate tickets. As confidence grows, teams can dial up automation on well-understood patterns, with a clear path to override decisions when humans disagree.
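One way to picture that gradual increase in automation is a small policy function. This is a sketch under stated assumptions, not a description of any product's logic: the risk thresholds (0.9 and 0.5), the `pattern_trusted` flag, and the names `Action`, `Decision`, and `decide` are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    DELIVER = "deliver"
    BANNER = "deliver_with_banner"
    SOFT_QUARANTINE = "soft_quarantine"


@dataclass
class Decision:
    action: Action
    needs_review: bool  # True when a human should confirm the action


def decide(risk: float, pattern_trusted: bool) -> Decision:
    """Map a risk score in [0, 1] to a reversible action.

    pattern_trusted marks patterns where the team has chosen to let the
    agent act without a human confirming each decision.
    """
    if risk >= 0.9:
        # Hold the message; ask for review unless the pattern is trusted.
        return Decision(Action.SOFT_QUARANTINE, needs_review=not pattern_trusted)
    if risk >= 0.5:
        # Deliver, but warn the user to verify the request out of band.
        return Decision(Action.BANNER, needs_review=False)
    return Decision(Action.DELIVER, needs_review=False)


high_risk_new_pattern = decide(0.95, pattern_trusted=False)
high_risk_known_pattern = decide(0.95, pattern_trusted=True)
```

The only thing that changes as confidence grows is the `pattern_trusted` input, so dialing automation up or back down does not require touching the actions themselves.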

If you look past the marketing copy and into live deployments, the earliest AI gains in email tend to show up in the work analysts complain about the most.

User-reported phishing triage is usually first. In many organizations, analysts spend a lot of time answering the same question in different forms: “Is this vendor email just spam, or is it dangerous?” An AI agent that can cluster similar reports, discard obvious noise, and surface the handful of genuinely risky cases gives those hours back and reduces burnout.

Alert enrichment and prioritization is another early win. Without AI, most alerts look similar in the queue. An analyst has to pull context to figure out who the target is, how they relate to the sender, and what might happen if the email is real. With AI in the loop, that context can arrive pre-assembled as a short narrative: “Likely BEC against Finance, impersonating a known supplier, requesting a bank detail change under time pressure.” That is a very different starting point from “suspicious email, see headers.”

Campaign deduplication is the third big one. A single phishing or fraud campaign can hit hundreds or thousands of inboxes. Traditional workflows often generate one ticket per message, and analysts end up investigating the same threat over and over. A good email AI agent can identify that these messages are part of the same pattern and collapse them into one enriched incident. The team investigates once, not a hundred times.
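The collapsing step can be sketched very simply. The example below groups messages by sender domain and a normalized subject line, which is a deliberately naive stand-in for the similarity models a real agent would use. The message fields, the sample data, and the function names `campaign_key` and `collapse` are assumptions for illustration.

```python
import re
from collections import defaultdict


def campaign_key(sender: str, subject: str) -> tuple:
    """Build a grouping key from the sender domain and a normalized subject."""
    domain = sender.rsplit("@", 1)[-1].lower()
    s = subject.lower()
    s = re.sub(r"^((re|fw|fwd):\s*)+", "", s)  # drop reply/forward prefixes
    s = re.sub(r"\d+", "#", s)                 # mask invoice or ticket numbers
    s = re.sub(r"\s+", " ", s).strip()         # collapse whitespace
    return (domain, s)


def collapse(messages):
    """Group messages into incidents, keeping the list of affected recipients."""
    incidents = defaultdict(list)
    for m in messages:
        incidents[campaign_key(m["sender"], m["subject"])].append(m["recipient"])
    return dict(incidents)


# Hypothetical reports: two belong to one campaign, one is unrelated.
messages = [
    {"sender": "billing@acme-suppiies.com", "subject": "Invoice 10482 overdue",
     "recipient": "ap1@example.org"},
    {"sender": "accounts@acme-suppiies.com", "subject": "RE: Invoice 20931 overdue",
     "recipient": "ap2@example.org"},
    {"sender": "news@example.net", "subject": "Weekly digest",
     "recipient": "bob@example.org"},
]

incidents = collapse(messages)
```

Here three messages become two incidents, and the campaign incident carries both affected recipients so the analyst investigates it once.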

The broader data reflects the same story. Among organizations that have gone deep on agentic AI, 65% report reduced time to resolution and 58% report a reduction in security ticket volume. That is exactly the kind of change teams feel first in email: fewer low-value tickets, faster decisions, and clearer identification of dangerous patterns.

When organizations start exploring AI for email security, the conversation often jumps straight to platform decisions and rip-and-replace plans.

A better approach is to treat it like any other product experiment.

Start by choosing one or two metrics that actually hurt today. Maybe it is the time it takes to triage user-reported phishing, or the total number of email-related tickets the team has to touch every month. The important part is that you already care about these numbers.

Next, put an AI agent in the loop for those workflows only. Let it cluster and prioritize user reports. Let it enrich and summarize alerts before an analyst sees them. Keep all actions reversible so no one feels locked in.

Then run a short, honest comparison. Over a few weeks, look at baseline versus AI-assisted triage times, ticket volume and duplication, and how analysts feel about the change. Are they saying their day is smoother, or that they are babysitting a noisy model? Independent Total Economic Impact (TEI) studies of AI-driven security operations have shown investigation and response times improving by 50–65% over a few years. Email is where you can test for that kind of improvement on a smaller, safer surface area before pushing AI deeper into the stack.
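The arithmetic behind that comparison is simple enough to keep in a notebook. The sketch below computes the percentage change in median triage time and in ticket volume between a baseline period and an AI-assisted period. All numbers are placeholders chosen to make the math easy to follow, not measurements, and the helper name `pct_change` is an assumption.

```python
from statistics import median


def pct_change(before: float, after: float) -> float:
    """Percentage change from before to after (negative means a reduction)."""
    return (after - before) / before * 100.0


# Placeholder values only; substitute your own measurements.
baseline_triage_minutes = [40, 30, 50]
assisted_triage_minutes = [30, 20, 40]
baseline_tickets = 200
assisted_tickets = 150

triage_change = pct_change(
    median(baseline_triage_minutes), median(assisted_triage_minutes)
)
ticket_change = pct_change(baseline_tickets, assisted_tickets)

print(f"Median triage time change: {triage_change:.1f}%")
print(f"Ticket volume change: {ticket_change:.1f}%")
```

Using the median rather than the mean keeps one unusually slow investigation from dominating the result over a short comparison window.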

Finally, scale what clearly works and stop what does not. Treat the agent the way you would treat a new human hire in the SOC: start with a narrow set of responsibilities, give it feedback, and increase its autonomy as it proves itself.

AI in security is real, but the impact is not evenly distributed across the stack.

Email stands out because it is where the business risk is obvious, the pain is acute, the data is rich, and the metrics are unambiguous. If you need to prove that AI can actually improve your security posture, not just your story in a board deck, the inbox is the most natural place to start.

Budgets are already shifting in that direction. On average, organizations allocate roughly a quarter of their IT spend to AI, and early adopters of agentic AI push that closer to four in ten, with at least half of their future AI budget earmarked for agents. The money is there. The question is where you can show value soonest.

At AegisAI, we have built our platform around a simple belief: email is where AI security ROI shows up first.

Detect, explain, act, with guardrails and measurement from day one.

If you are exploring AI for email security and want to talk through how to measure ROI in your own environment, we are always happy to share what we are seeing in the field.

Book your demo with AegisAI today: https://www.aegisai.ai/book-demo

Sources: