For the last decade, the cybersecurity industry has rallied behind a single mantra:

“Humans are the last line of defense.”

We built the idea of the Human Firewall. We poured budget into phishing simulations, awareness videos, and learning management system (LMS) modules. The strategy was straightforward: technology might miss a threat, but a vigilant, well-trained employee would catch it. Someone would spot the red flags.

That strategy was designed for a world that no longer exists.

Our research shows that the arrival of generative AI has quietly broken many of the assumptions behind traditional training programs. By stripping out the very signals employees were taught to look for, AI has turned “user vigilance” from a safety net into a stress test.

The answer is not to abandon people. It is to redefine their role. We don’t need employees to act as firewalls. We need them to act as a context layer.

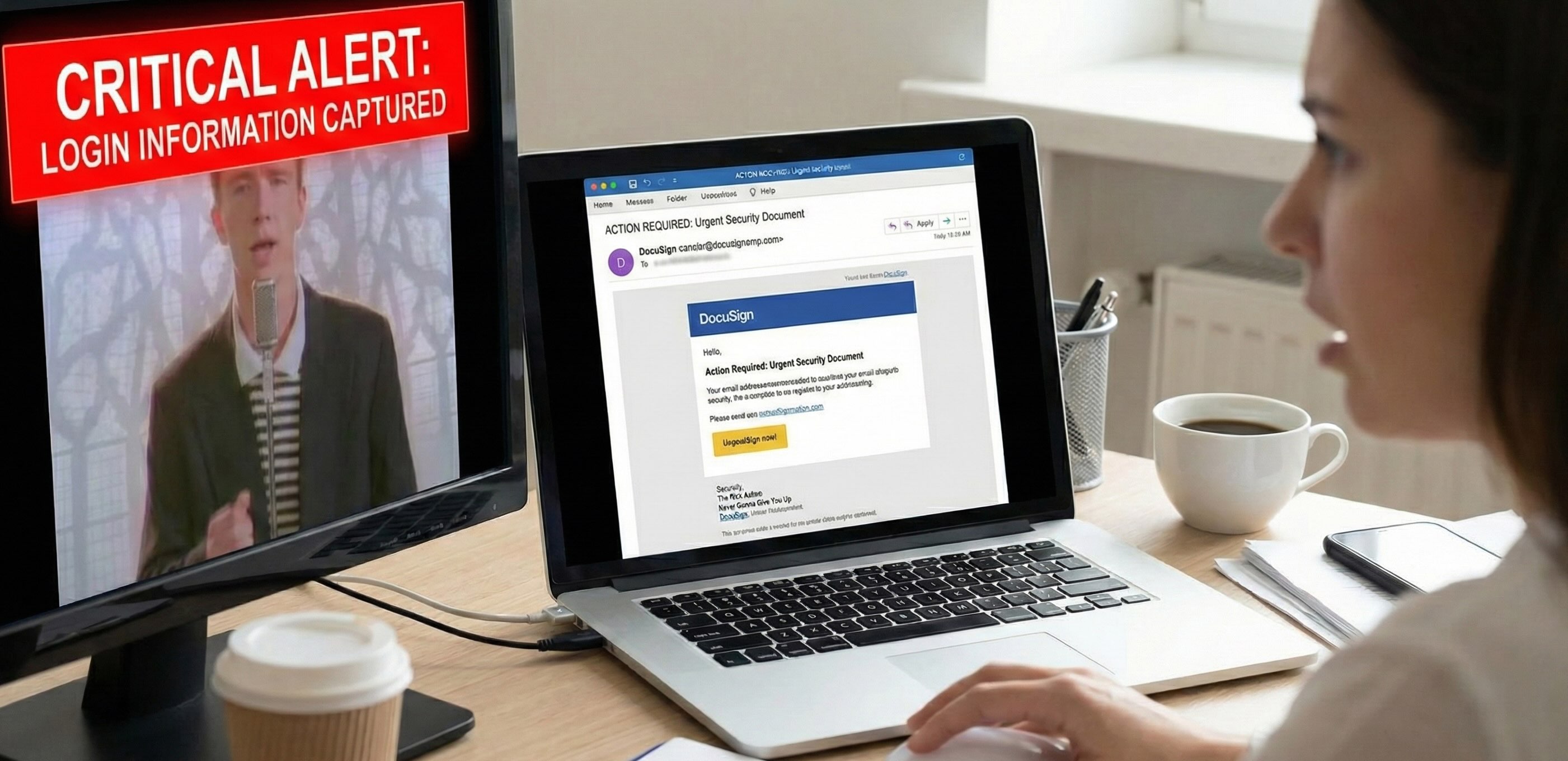

Traditional security training conditions the human brain to look for visible errors. Most programs still revolve around the same checklist: typos and awkward grammar, generic greetings, and sloppy formatting.

Employees are told to be suspicious of phrases that sound off, like “Kindly do the needful,” or messages that open with “Dear Valued Customer.” They are shown examples of pixelated logos, mismatched fonts, and inconsistent layouts. The implicit promise is that if they slow down and look carefully enough, something on the surface will give the attack away.

AI has patched every one of these “bugs.”

In our data, AI-generated attacks systematically remove the perceivable errors that training relies on. The grammar is native-level. The tone matches a real colleague or vendor. The layout is clean and often mimics the target’s own brand style.

When an email has been mathematically polished, “look closer” is no longer a useful instruction. There is nothing obviously wrong to find.

So if spotting typos and awkward phrasing is no longer viable, what is left for humans to actually do?

Once the surface is clean, the only remaining signal is context.

Generative AI is extremely good at imitating style. It can sound like your CEO, your head of finance, or a trusted vendor. What it does not have is any native understanding of your internal reality: what has already been signed, who normally sends which requests, and how your teams genuinely work day to day.

That mismatch is what we call the Context Gap.

Consider a seemingly benign message:

“Hi Cy, regarding your session at the APWG summit next week, please review the attached updated speaker agreement.”

There are no typos here. The grammar is perfect. It references a real event. It sounds like a normal piece of conference logistics.

The only person who can see the gap is the recipient. They might silently think: “I already signed that agreement yesterday.” Or, “The organizer always emails me from an @apwg.org address, not this sender.” Or, “We never email contracts as attachments; we always use a signing portal.”
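The recipient's three silent checks can be written down as explicit rules. The Python sketch below is illustrative only: `Message`, `WorkflowContext`, and `context_gaps` are hypothetical names, the sender address `organizer@lookalike.example` is invented, and the context record stands in for knowledge that, in practice, lives in the recipient's head, calendar, and inbox. Each rule depends on internal state (what was already signed, which domain the organizer normally uses, which channel carries contracts) that the message text alone does not reveal.

```python
from dataclasses import dataclass, field

@dataclass
class Message:
    sender: str           # From address as shown to the recipient
    document: str         # what the message asks the recipient to review
    has_attachment: bool

@dataclass
class WorkflowContext:
    """Hypothetical record of what the recipient already knows about this workflow."""
    expected_sender_domain: str
    already_signed: set[str] = field(default_factory=set)
    contracts_via_portal: bool = True  # assumption: contracts normally go through a signing portal

def context_gaps(msg: Message, ctx: WorkflowContext) -> list[str]:
    """Return every way the message contradicts the recipient's known context."""
    gaps = []
    domain = msg.sender.rsplit("@", 1)[-1].lower()
    if domain != ctx.expected_sender_domain.lower():
        gaps.append(f"sender domain {domain} is not {ctx.expected_sender_domain}")
    if msg.document in ctx.already_signed:
        gaps.append(f"{msg.document} was already signed")
    if ctx.contracts_via_portal and msg.has_attachment:
        gaps.append("contract arrived as an attachment, not via the signing portal")
    return gaps

# The APWG example: polished text, but it contradicts all three pieces of context.
msg = Message(sender="organizer@lookalike.example", document="speaker agreement", has_attachment=True)
ctx = WorkflowContext(expected_sender_domain="apwg.org", already_signed={"speaker agreement"})
for gap in context_gaps(msg, ctx):
    print("-", gap)
```

Note that the wording of the email never enters the checks: a perfectly written message fails them just as easily as a clumsy one.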

That lived context is the one advantage humans still have over algorithms. They know the real state of their world. They can tell when something doesn’t fit the pattern of their actual workflows, even when the text itself looks fine.

The problem is that we are not designing our defenses to help them use that advantage.

Instead of empowering employees to use context, we have put them in a cognitive trap.

As we discussed in Part 3, attackers are now running criminal account-based marketing (ABM) campaigns against specific roles such as the C-suite, Sales, and Finance. Those roles are built around speed and trust.

Executives are paid to approve decisions fast enough that the business does not stall. Sales is paid to respond to strangers, reply quickly, and click links if it keeps a deal moving. Finance is paid to process invoices, update payment details, and hit payment deadlines.

Then security shows up and says: “Never trust unexpected emails. Never click suspicious links. Double-check everything.”

We have created a game no human can win: move faster every quarter, but never make a single mistake.

Expecting a busy employee to manually verify the context of every message is not realistic. They do not have the time to cross-check calendars, confirm invoice numbers, and call vendors as a matter of routine. Your processes are not built to tolerate that amount of friction in every workflow. At scale, the instruction “always verify out of band” simply collapses.

Even when an employee does slow down and look carefully, attackers can still manipulate what they see.

One of the evasion techniques we highlight in the report is HTML character obfuscation. In this pattern, attackers insert invisible tags inside sensitive words. To a scanner, the word becomes broken or noisy. To a human, it looks normal.

For example, a payload might contain something like:

```html
pa<span style="display:none">XYZ</span>ssword
```

To the Secure Email Gateway (SEG) or content filter, that string is not a clean “password” token. It is a strange sequence of characters that may not match a simple rule. To the human in a browser, it renders as a clean, readable “password.”

Tools see junk. Humans see clean text.
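To make the asymmetry concrete, here is a minimal Python sketch; it is not a description of how any particular SEG or content filter works. It assumes the only hiding trick is an inline `display:none` style on an element, so other techniques (for example, hiding via stylesheet classes) are out of scope. The helper names `visible_text`, `naive_match`, and `normalized_match` are hypothetical. A keyword check on the raw markup misses the split word, while the same check on the visible text finds it.

```python
from html.parser import HTMLParser

# Void elements have no closing tag, so they are never pushed onto the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}

class VisibleTextParser(HTMLParser):
    """Keeps only text a reader would see.

    Assumption: content is hidden solely via an inline style containing
    display:none. Stylesheet classes and other tricks are not handled.
    """

    def __init__(self):
        super().__init__()
        self.hidden_stack = []  # one flag per open element: does it hide its contents?
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = (dict(attrs).get("style") or "").replace(" ", "").lower()
        self.hidden_stack.append("display:none" in style)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.hidden_stack:
            self.hidden_stack.pop()

    def handle_data(self, data):
        # Drop text if any enclosing element is hidden.
        if not any(self.hidden_stack):
            self.parts.append(data)

def visible_text(html):
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    return "".join(parser.parts)

def naive_match(html, keyword):
    # Keyword check on the raw markup, as a simple rule might do.
    return keyword in html.lower()

def normalized_match(html, keyword):
    # Same check, applied to the text a reader actually sees.
    return keyword in visible_text(html).lower()

payload = 'Please confirm your pa<span style="display:none">XYZ</span>ssword today.'
print(naive_match(payload, "password"))       # False
print(visible_text(payload))                  # Please confirm your password today.
print(normalized_match(payload, "password"))  # True
```

Normalizing to visible text before matching closes this specific gap. It does nothing for a message whose text is clean to begin with, which is exactly the Context Gap problem described above.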

Combine that with the fact that the email has already passed through a SEG without incident, and the employee’s mental model is obvious: “If it was really bad, the system would have blocked it.”

We have engineered a situation in which the same people who once spotted threats clearly are now overloaded, rushed, and under-supported.

AI-powered spearphishing forces a hard admission: if your strategy depends on an accountant, salesperson, or executive outsmarting a supercomputer after a ten-minute training session once a quarter, you have already lost.

We cannot ask humans to fight algorithms with their bare hands.

If we want employees to function as a true context layer, we need to give them systems that carry the heavy load and surface only the decisions they are uniquely qualified to make.

In other words, we need semantic defense controls that understand context and intent, not just surface form.

The paradox is that the same statistical patterns that make AI-generated text so convincing also make it detectable, if you know what to look for.

In our final installment, Part 5, we’ll show how Semantic Defense battles AI-powered spearphishing. By leaning into the predictability of AI-generated language and the structure of criminal workflows, we can catch the phish that humans cannot see on their own and support employees as a true context layer instead of an overworked last resort.

If you want to go deeper into the data and examples behind this series, you can download the full research paper: [Download the Full Report: AI-Powered Spearphishing at Scale]