For decades, security teams relied on a simple truth: phishing was a volume game. Attackers sent millions of generic, poorly worded emails, hoping for a tiny fraction of victims to slip up. Because the quality was low—riddled with typos and generic greetings like "Dear Valued Customer"—our defenses could spot them.

That playbook is gone.

To understand this shift, we have to look beyond the traditional meaning of spear phishing. In the past, "spear phishing" implied a manual, labor-intensive process reserved for high-value targets. It was distinct from standard email phishing, which was broad and indiscriminate.

But AI has collapsed this distinction.

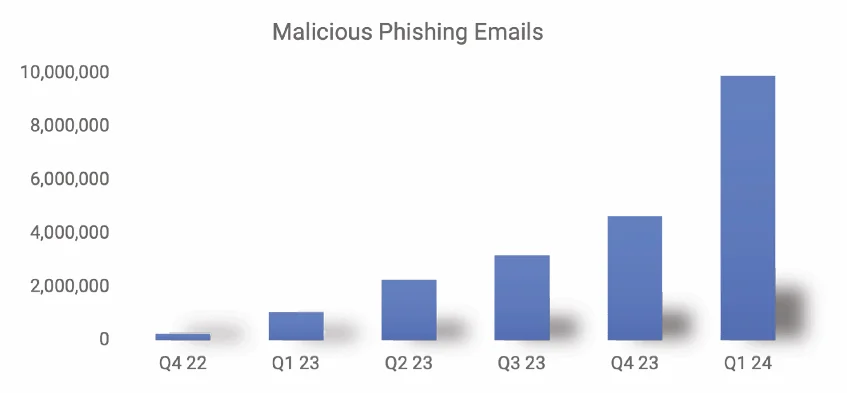

According to our latest study of 1,921 malicious emails, the threat landscape has undergone a fundamental transformation. Since the public launch of ChatGPT, security researchers have reported a staggering 4,151% increase in phishing attacks [1].

This isn't just a spike. It is a shift in the business model of cybercrime.

To understand the explosion in volume, we must stop viewing hackers merely as thieves and start viewing them as competitors launching a product line.

If you were the "Head of Growth" for a criminal enterprise, Generative AI would be your obvious Go-To-Market accelerator. It solves every bottleneck in the “crime supply chain.”

Historically, whaling phishing—attacks targeting C-suite executives—required weeks of research. A human operator had to study the victim, learn their writing style, and map their relationships. It was effective, but it didn't scale.

Today, Large Language Models (LLMs) can replicate that level of personalization instantly. They can ingest a target's LinkedIn profile, mimic their company's tone, and generate a bespoke lure in seconds. The result is that the high-effort tactics of whaling phishing are now being applied at the volume of spam.

This economic reality is driving an adoption rate that mirrors the enterprise migration to Cloud and SaaS in the early 2010s.

We are currently in the steep middle of the S-curve. Adoption of AI tools by criminals is no longer speculative; it is proven, but the market is far from saturated.

For CISOs, this dictates a specific planning horizon: you must assume continued, step-function growth for the next 2–3 years.

As Cy Khormaee, Founder of AegisAI, notes in the report:

"The 100% growth rate is not just a projection; it is the logical outcome of a revolutionary technology being adopted by a market that is highly motivated by profit."

What does this "product growth" look like in your inbox? It looks like hyper-specialization.

Our analysis shows that 10.6% of malicious emails are already AI-generated. But the AI isn't just writing generic spam; it is powering specific, high-trust attack vectors that are seeing explosive year-over-year growth:

The result of this "product improvement" is clear: user click rates on phishing emails increased by 190% in 2024. By eliminating the typos and grammar errors that used to be our primary defense, AI has effectively optimized the attack's conversion rate.
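Percentage increases of this size are easy to misread, so it can help to restate them as multipliers. The short sketch below converts the two increases quoted in this article (4,151% and 190%) into "times the baseline" figures. The function name `increase_to_multiplier` is our own, chosen for illustration. It is plain arithmetic, not part of the study's methodology.

```python
def increase_to_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a before/after multiplier.

    A 100% increase means the value doubled, so the multiplier is 2.0.
    """
    return 1 + percent_increase / 100


# Figures quoted in this article.
phishing_volume = increase_to_multiplier(4151)  # increase in phishing attacks
click_rate = increase_to_multiplier(190)        # increase in user click rates

print(f"Phishing volume: {phishing_volume:.2f}x the baseline")  # 42.51x
print(f"Click rate: {click_rate:.2f}x the baseline")            # 2.90x
```

In other words, a 4,151% increase means roughly 42 times as many attacks as before, and a 190% increase means click rates are nearly three times their earlier level.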

This trajectory demands an immediate shift in defense strategy.

So, how exactly are they doing it? It’s not magic. It’s a repeatable, three-step automated process. In Part 2 of this series, we will tear down the Anatomy of an AI Attack, showing exactly how an algorithm turns a LinkedIn profile into a weaponized lure in seconds.

The growth of AI phishing isn't hypothetical; it's likely already in your employees' inboxes.

You’ve seen the statistics. Now see the defense. Request a personalized demo to see exactly which "product lines" (BEC, Smishing, or Credential Theft) are bypassing your current filters, and how Semantic Defense stops them.