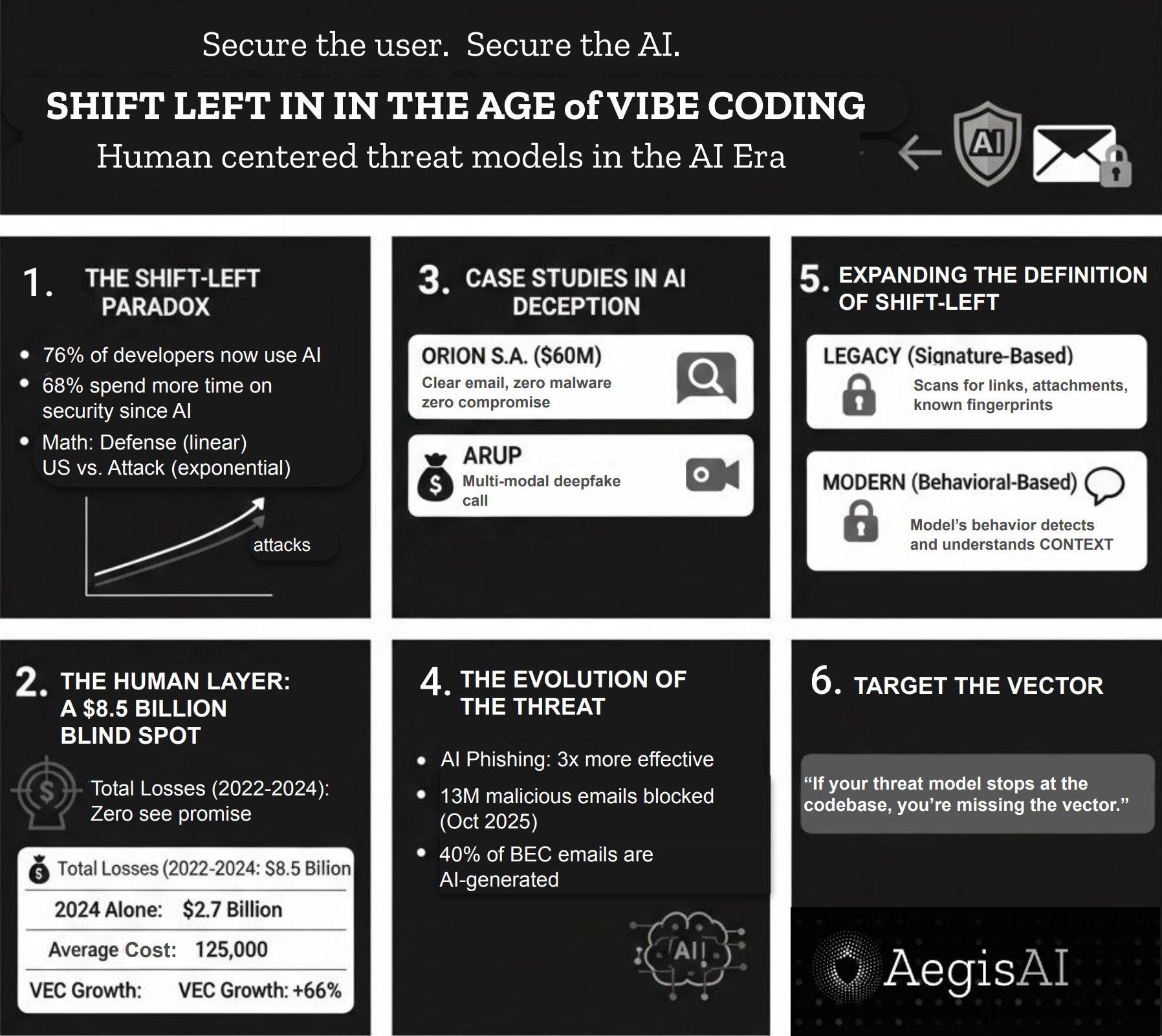

The widening gap between AI-native output and security oversight keeps me up at night. Organizations are shipping code orders of magnitude faster than ever before. Attack surfaces grow exponentially. Defensive capabilities grow linearly. This math doesn't work.

We've spent years preaching "shift left": embed security earlier in the development lifecycle, catch vulnerabilities before deployment, and make security everyone's job. The philosophy is sound. The implementation is lagging.

According to Stack Overflow's 2024 Developer Survey, 76% of developers are using or planning to use AI tools in their development process. Meanwhile, attackers have figured out something the security industry seems reluctant to acknowledge: why break through code when you can walk through the inbox?

DevSecOps promises comprehensive protection. Automated security testing, real-time threat intelligence, AI-driven vulnerability detection—all integrated into CI/CD pipelines. On paper, it's bulletproof.

On the ground, it's a different story.

AI-generated code carries every risk of third-party code: injection vulnerabilities, memory management problems, insecure dependencies, secrets management failures. A recent Checkmarx study reveals something counterintuitive: 68% of developers now spend more time resolving security vulnerabilities than they did before adopting AI-generated code.

We promised acceleration. We delivered technical debt at scale. And while security teams drown in code review, attackers have already breached the Microsoft 365 perimeter—not through exploits, but through persuasion.

Consider the position of today's developers. They coordinate agent networks, orchestrate automated workflows, ship features at speeds that would have seemed impossible five years ago. This velocity represents genuine technological progress. It also concentrates unprecedented power in human judgment—which remains as fallible as ever.

In August 2024, Orion S.A. disclosed to the SEC that an employee had been deceived into wiring $60 million to attackers.

Zero systems compromised. Zero malware deployed. An attacker impersonated a trusted figure. The employee followed instructions.

The email security gateway saw nothing suspicious because there was nothing technically malicious to detect. No links. No attachments. Only language carefully engineered to trigger action.

$60 million evaporated because business email security failed at the human layer. This is the modern business email compromise: the most devastating attack in your threat landscape arrives disguised as the most boring email in someone's inbox.

Business email compromise accounted for nearly $8.5 billion in losses reported to the FBI between 2022 and 2024. In 2024 alone: $2.7 billion stolen. These aren't sophisticated zero-day exploits. They're social engineering attacks weaponizing a single vulnerability: trust.

The trend line is accelerating. BEC attacks increased 15% in 2025 compared to 2024. Forty percent of BEC phishing emails are now AI-generated. Attackers use the same tools we do—they just use them first.

Average cost per incident: $125,000. But the outliers are catastrophic.

In early 2024, a finance worker at Arup, the British engineering firm behind landmarks like the Sydney Opera House, received an email purporting to come from the company's CFO and requesting a "secret transaction." The employee's instincts were sound: this seemed wrong.

The attackers adapted. They scheduled a video call. On screen: the CFO. Other senior colleagues. Everyone discussing the transaction as routine business, with the casual familiarity of people who'd worked together for years.

Every single person on that call was an AI-generated deepfake.

Fifteen transfers. $25 million. Gone.

Rob Greig, Arup's CIO, later stated: "None of our systems were compromised and there was no data affected. People were deceived into believing they were carrying out genuine transactions."

This wasn't a technology failure in the traditional sense. It was a trust failure that no secure email gateway was designed to prevent. What began as classic BEC evolved into a multi-modal attack spanning email, video, and voice. Your email security saw a normal meeting invite. Your video platform saw authenticated users. The human saw the fraud only after the money had already moved.

Most enterprise-grade phishing prevention tools remain reactive. Traditional email security gateways were built to detect malicious payloads: infected attachments, bad links, known threat signatures. These tools still block mass-market attacks effectively. But BEC doesn't need payloads. BEC uses persuasion.
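To make the gap concrete, here is a minimal sketch of a payload-only check. It is an illustration, not any vendor's implementation: the `Email` type, the blocklists, the domains, and the `payload_findings` function are all hypothetical. It flags risky attachments and known-bad links, and reports a text-only BEC message as clean.

```python
import re
from dataclasses import dataclass, field

# Hypothetical blocklists, for illustration only.
KNOWN_BAD_DOMAINS = {"malware-example.test"}
RISKY_EXTENSIONS = (".exe", ".js", ".scr")

# Captures the host part of any http(s) URL in the body.
URL_PATTERN = re.compile(r"https?://([^/\s]+)", re.IGNORECASE)


@dataclass
class Email:
    sender: str
    subject: str
    body: str
    attachments: list[str] = field(default_factory=list)


def payload_findings(email: Email) -> list[str]:
    """Return payload-based findings; an empty list means 'clean'."""
    findings = []
    for name in email.attachments:
        if name.lower().endswith(RISKY_EXTENSIONS):
            findings.append(f"risky attachment: {name}")
    for host in URL_PATTERN.findall(email.body):
        if host.lower() in KNOWN_BAD_DOMAINS:
            findings.append(f"known-bad link: {host}")
    return findings


# A commodity phish carries payloads the scanner can see.
commodity = Email(
    sender="billing@malware-example.test",
    subject="Invoice",
    body="Open http://malware-example.test/pay to view your invoice.",
    attachments=["invoice.exe"],
)

# A BEC message carries no link and no attachment, only language.
bec = Email(
    sender="cfo@example.com",
    subject="Urgent and confidential",
    body="I need a wire sent today to a new account. Keep this between us.",
)

print(payload_findings(commodity))
print(payload_findings(bec))
```

The second message produces no findings because nothing in it matches a payload rule. Any detection has to come from context: who is asking, for what, and whether that fits the sender's history.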

Microsoft's 2025 Digital Defense Report makes the shift explicit: AI-driven phishing is now three times more effective than traditional campaigns. BEC has evolved from manual, low-volume scams into a professionalized service economy. Access brokers sell stolen credentials to BEC operators who automate target selection and payment fraud at industrial scale.

The security tools that are catching up reflect this evolution. They don't just scan for malicious signatures; they model behavior, detect anomalies, and interpret context.

For organizations evaluating solutions, the questions worth asking are:

Does this platform understand behavior, or only signatures?

Can it detect emails that are technically legitimate but contextually suspicious?

How does it handle vendor email compromise, which rose 66% in the first half of 2024?

What happens when the attack spans email, video, and collaboration tools simultaneously?

The shift-left philosophy needs expansion, not replacement. Yes, secure your code. Yes, embed security into DevOps pipelines. Yes, use AI to catch vulnerabilities before deployment.

But recognize this: while your developers race to ship, attackers bypass your entire technical stack by exploiting the most vulnerable system in your organization—human decision-making under time pressure.

The Arctic Wolf 2024 Trends Report found that 70% of organizations were targeted by BEC attacks last year. Twenty-nine percent became victims. This isn't a code vulnerability. This is a trust vulnerability operating at the exact intersection where business velocity meets human judgment.

As AI accelerates both development and attack sophistication, the defensive window shrinks. Microsoft's threat intelligence team blocked over 13 million malicious emails linked to a single phishing platform (Tycoon2FA) in October 2025 alone. That's the scale. That's the velocity.

Shift-left must expand beyond catching bugs earlier in the SDLC. It must recognize that your attack surface extends far beyond your codebase—into every inbox, every video call, every moment where an employee decides whether to trust a request.

Shift-left is necessary. It is not sufficient.

If you're investing millions in DevSecOps while your email security still relies on legacy signature-based detection, you've built a fortress and left the front door open. If your threat model stops at the codebase, you're missing the vector that just cost Arup $25 million and Orion $60 million.

AI cuts both ways. Your developers use it to ship faster. Attackers use it to craft emails that pass every technical filter while triggering every psychological one. The question isn't whether to use AI for defense. The question is whether your AI can learn faster than theirs.

Email remains the primary attack vector—not because of technical sophistication, but because of psychological effectiveness. BEC attacks don't need malware when they have manipulation. They don't need exploits when they have urgency, authority, and the appearance of legitimacy.

Effective email security platforms understand context, not just content. They recognize that an email from your CEO at 2 AM requesting an immediate wire transfer to a new account warrants investigation—even if every character is technically legitimate, even if the domain is correct, even if the signature looks right.
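A rough sketch of that kind of contextual check follows. Everything in it is an assumption made for illustration: the `PaymentRequest` fields, the working-hours window, the urgency word list, and the two-signal threshold. A real platform would learn these baselines per sender rather than hard-code them.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical urgency vocabulary; a real system would model language, not match words.
URGENCY_TERMS = ("immediately", "urgent", "today", "right away")


@dataclass
class PaymentRequest:
    sender: str
    received_at: datetime
    body: str
    beneficiary_account: str


def context_signals(req, known_accounts, usual_hours=(8, 19)):
    """Collect contextual warning signs; none of them inspects a payload."""
    signals = []
    start, end = usual_hours
    if not (start <= req.received_at.hour < end):
        signals.append("sent outside the sender's usual hours")
    if req.beneficiary_account not in known_accounts:
        signals.append("beneficiary account never paid before")
    text = req.body.lower()
    if any(term in text for term in URGENCY_TERMS):
        signals.append("urgency language")
    return signals


def needs_review(req, known_accounts, threshold=2):
    """Hold the request for human verification when enough signals stack up."""
    return len(context_signals(req, known_accounts)) >= threshold


known_accounts = {"GB00-KNOWN-0001"}  # assumed history of previously paid accounts

suspicious = PaymentRequest(
    sender="ceo@example.com",
    received_at=datetime(2025, 3, 4, 2, 0),  # 2 AM
    body="Please wire the funds immediately to the account below.",
    beneficiary_account="GB00-NEW-9999",
)

routine = PaymentRequest(
    sender="ceo@example.com",
    received_at=datetime(2025, 3, 4, 10, 30),
    body="Approved, please pay the monthly invoice as usual.",
    beneficiary_account="GB00-KNOWN-0001",
)

print(context_signals(suspicious, known_accounts))
print(needs_review(suspicious, known_accounts), needs_review(routine, known_accounts))
```

No single signal is damning on its own. Executives do send late emails, and new vendors do get paid. The design choice is to escalate on the combination, which is why the sketch counts signals instead of blocking on any one of them.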

The future is agent-based, behavioral detection. Static rules cannot keep pace with AI-generated threats that learn, adapt, and evolve with each campaign. Your defenses must learn and adapt at the same velocity—or faster.

We're building AegisAI to solve this specific problem: the gap between code velocity and human vulnerability. But the solution matters less than the recognition. Email security cannot be treated as a commodity line item. It must be recognized as what it is—a critical control point where business velocity, human psychology, and adversarial AI intersect.

While you shift left, the attackers are already inside your inbox. The question is whether you'll notice before the wire transfer clears.